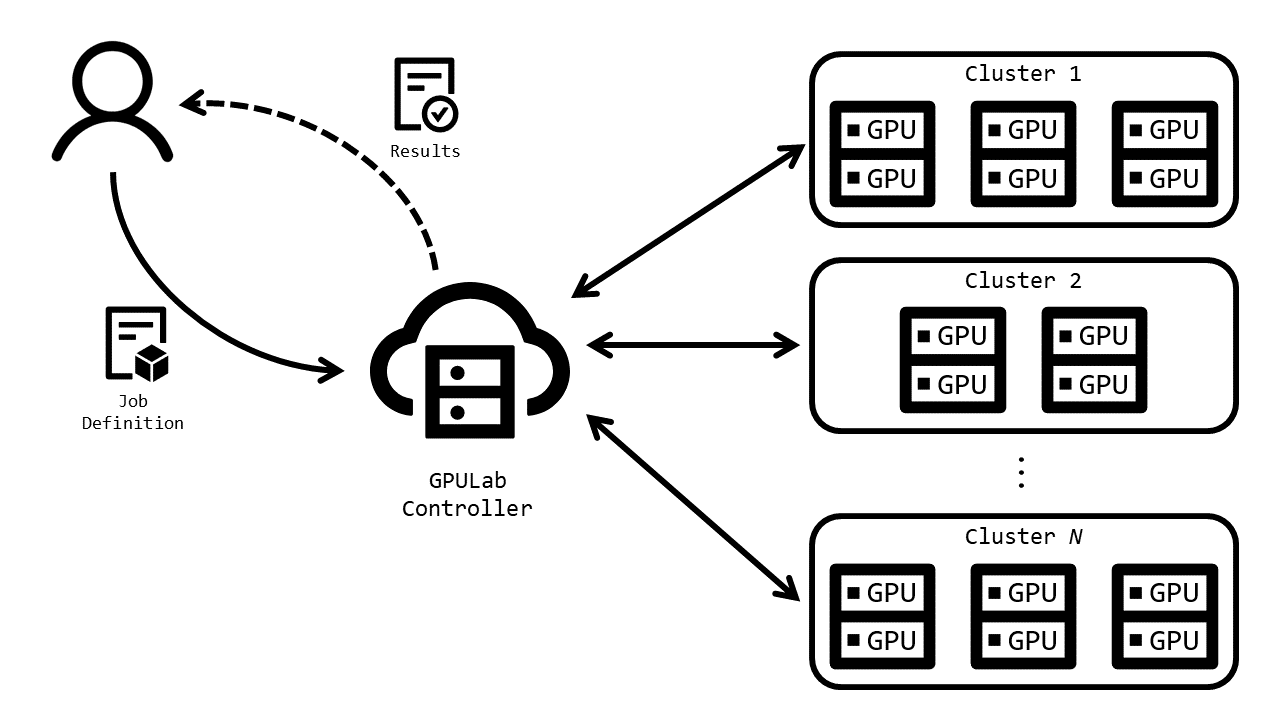

GPULab is a distributed system for running jobs in GPU-enabled Docker containers. It consists of a set of heterogeneous clusters, each with its own characteristics (GPU model, CPU speed, memory, bus speed, …), allowing you to select the most appropriate hardware. Each job runs isolated in its own Docker container with dedicated CPUs, GPUs and memory for maximum performance.

Some key numbers: